Provider Backbone Bridging (PBB)

As you can see, I've been writing about provider architectures lately. Last week I wrote about Bridging and Provider Bridging, where you can read that traditional bridging is no longer enough for large networks, because service providers and cloud companies need more than 4096 VLANs. Provider Bridging is an option because double VLAN tags let us address up to about 16 million networks. However, Provider Bridging does not scale well: customer MAC addresses are learned by every router, even core and spine routers, which calls for big and expensive TCAM. Provider Backbone Bridging (PBB) is here to solve this problem.
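To make the numbers above concrete, here is a small sketch of the arithmetic. The 12-bit VLAN ID width comes from the 802.1Q tag format; the 24-bit I-SID width (the PBB service identifier) is not mentioned above and is added here only for comparison. The function and variable names are my own.

```python
# Field widths from the IEEE 802.1 tag formats.
VLAN_ID_BITS = 12   # VLAN ID field of one 802.1Q tag
ISID_BITS = 24      # I-SID field of the 802.1ah I-TAG (added for comparison)


def id_space(bits: int) -> int:
    """Number of distinct values an identifier of the given width can hold."""
    return 2 ** bits


single_tag = id_space(VLAN_ID_BITS)        # traditional bridging
double_tag = id_space(2 * VLAN_ID_BITS)    # Provider Bridging: two stacked tags
pbb_services = id_space(ISID_BITS)         # PBB service instances

print(f"single tag : {single_tag:>10,}")
print(f"double tag : {double_tag:>10,}")
print(f"PBB I-SID  : {pbb_services:>10,}")
```

Keep in mind that VLAN IDs 0 and 4095 are reserved, so the usable count per tag is 4094 rather than 4096.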

Provider Backbone Bridging is the IEEE 802.1ah standard, and its main difference from Provider Bridging is the encapsulation method: instead of stacking a second tag, it hides customer MAC addresses from the backbone. This is a great advantage because the backbone no longer needs expensive routers with big ternary content-addressable memories (TCAM) for large networks, just spine and core routers that speak PBB. Inner (customer) MAC addresses are encapsulated within outer (provider) MAC addresses, so customer frames stay hidden from the backbone.
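The MAC-in-MAC idea can be sketched in a few lines. This is only an illustration under some assumptions: the helper names (`mac`, `pbb_encapsulate`, `backbone_view`), the MAC addresses, the B-VID and the I-SID value are all made up, and the priority and flag bits of both tags are simply left at zero. The two EtherType values are the ones used for the backbone VLAN tag (0x88A8) and the 802.1ah service tag (0x88E7).

```python
import struct

BTAG_ETHERTYPE = 0x88A8  # backbone VLAN tag (same TPID as the S-TAG)
ITAG_ETHERTYPE = 0x88E7  # 802.1ah service instance tag


def mac(text: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    return bytes.fromhex(text.replace(":", ""))


def pbb_encapsulate(customer_frame: bytes, b_da: bytes, b_sa: bytes,
                    b_vid: int, i_sid: int) -> bytes:
    """Prepend a backbone header to an untouched customer frame."""
    if not 0 <= b_vid < 2 ** 12:
        raise ValueError("B-VID must fit in 12 bits")
    if not 0 <= i_sid < 2 ** 24:
        raise ValueError("I-SID must fit in 24 bits")
    # B-TAG: EtherType + 16-bit TCI (priority bits zero, VID in low 12 bits).
    b_tag = struct.pack("!HH", BTAG_ETHERTYPE, b_vid)
    # I-TAG: EtherType + 32-bit TCI (flag bits zero, I-SID in low 24 bits).
    i_tag = struct.pack("!HI", ITAG_ETHERTYPE, i_sid)
    return b_da + b_sa + b_tag + i_tag + customer_frame


def backbone_view(frame: bytes) -> tuple[bytes, bytes]:
    """The only addresses a core switch needs to look at: B-DA and B-SA."""
    return frame[0:6], frame[6:12]


# Hypothetical customer frame: C-DA, C-SA, EtherType (IPv4), payload.
customer_frame = (mac("00:00:5e:00:53:01") + mac("00:00:5e:00:53:02")
                  + struct.pack("!H", 0x0800) + b"payload")

pbb_frame = pbb_encapsulate(
    customer_frame,
    b_da=mac("02:00:00:00:00:0b"),  # egress backbone edge bridge (made up)
    b_sa=mac("02:00:00:00:00:0a"),  # ingress backbone edge bridge (made up)
    b_vid=100,
    i_sid=70000,
)

print(backbone_view(pbb_frame))
```

The core only forwards on the outer addresses, so its MAC table grows with the number of backbone edge bridges, not with the number of customer hosts.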

|

| Bridges, VLANs, Provider Bridges and Provider Backbone Bridges |

The

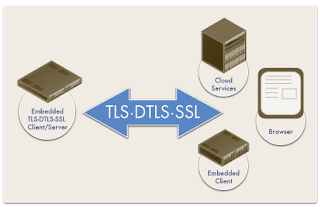

encapsulation technique is also called Overlay and

there are many technologies today that use this method to hide frames

and interconnect layer 2 networks. For

example, traditional VPNs are Network Overlays like OTV, VPLS

or LISP; We can configure Host Overlays as well like VXLAN, NVGRE or

STT; or even we can have a mix to make Hybrid Overlays. Anyway,

it's a good way to make

simple

and scalable networks without worrying about the underlying network

because Overlay Networks allow us to change, manage and deploy new

technologies quickly, although, sometimes, the architecture could

seem more complex and difficult to manage.

|

| Overlay Networks |

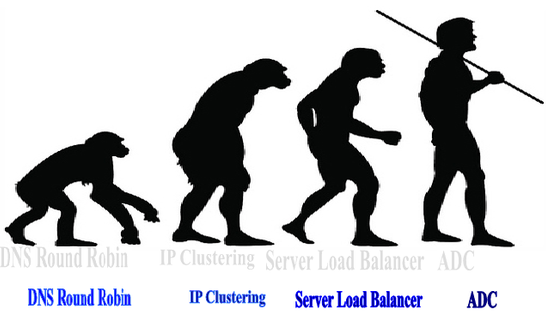

PBB implements intelligent bridging, which is useful for layer 2 multipathing. Traditional networks were built for North-South traffic such as web content and email, which limited their performance. Flat networks such as Clos networks, combined with PBB, are more manageable and scalable thanks to their East-West orientation, which offers better performance and reliability for server-to-server communication and is useful for cloud computing and Hadoop architectures.

Figure: North-South and East-West traffic

Multipathing is a great feature for Clos networks. However, although PBB uses encapsulation in the data plane to hide customer frames, it may still rely on Spanning Tree in the control plane for loop avoidance. This is a big problem: if we use Spanning Tree (STP) in the control plane, we inherit all of STP's problems, namely no layer 2 multipathing, limited scalability, convergence delays, North-South traffic patterns, etc. Consequently, we can implement PBB-TE (the IEEE 802.1Qay standard), PBB-EVPN over MPLS networks, or even SPB (the IEEE 802.1aq standard) for better performance, reliability and real layer 2 multipathing.
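To get a feeling for what Spanning Tree costs in a Clos fabric, here is a rough counting sketch. It assumes an idealized two-tier leaf-spine fabric with exactly one link between every leaf and every spine; the function names and the 8x4 example are my own, and real deployments will differ.

```python
def fabric_links(leaves: int, spines: int) -> int:
    """Physical links in a full-mesh leaf-spine fabric (one per leaf-spine pair)."""
    return leaves * spines


def spanning_tree_links(leaves: int, spines: int) -> int:
    """A spanning tree over N nodes keeps exactly N - 1 links forwarding."""
    return leaves + spines - 1


def multipath_count(spines: int) -> int:
    """Two-hop paths between two leaves when every spine can be used."""
    return spines


leaves, spines = 8, 4
total = fabric_links(leaves, spines)
forwarding = spanning_tree_links(leaves, spines)

print(f"links in the fabric        : {total}")
print(f"forwarding with STP        : {forwarding}")
print(f"blocked by STP             : {total - forwarding}")
print(f"leaf-to-leaf paths, no STP : {multipath_count(spines)}")
```

With a single spanning tree there is only one path between any two leaves, which is why a control plane that can use all the equal-cost paths matters so much for East-West traffic.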

Regards, my friends. This is all moving fast, so keep studying!
